Microsoft Ignite 2023

Simplify IT management with Microsoft Copilot for Azure – save time and get answers fast

Today, we’re announcing Microsoft Copilot for Azure, an AI companion that helps you design, operate, optimize, and troubleshoot your cloud infrastructure and services. Combining the power of cutting-edge large language models (LLMs) with the Azure Resource Model, Copilot for Azure enables rich understanding and management of everything that’s happening in Azure, from the cloud to the edge.

The cloud management landscape is evolving rapidly: there are more end users, more applications, and more requirements demanding more capabilities from the infrastructure. The number of distinct resources to manage is rapidly increasing, and the nature of each of those resources is becoming more sophisticated. As a result, IT professionals spend more time looking for information and are less productive. That’s where Copilot for Azure can help.

Microsoft Copilot for Azure helps you complete complex tasks faster, quickly discover and use new capabilities, and instantly generate deep insights to scale improvements broadly across the team and organization.

In the same way GitHub Copilot, an AI companion for development, is helping developers do more in less time, Copilot for Azure will help IT professionals. Recent GitHub data shows that among developers who have used GitHub Copilot, 88 percent say they’re more productive, 77 percent say the tool helps them spend less time searching for information, and 74 percent say they can focus their efforts on more satisfying work. [1]

Copilot for Azure helps you:

- Design: create and configure the services needed while aligning with organizational policies
- Operate: answer questions, author complex commands, and manage resources
- Troubleshoot: orchestrate across Azure services for insights to summarize issues, identify causes, and suggest solutions
- Optimize: improve costs, scalability, and reliability through recommendations for your environment

Copilot is available in the Azure portal and will be available from the Azure mobile app and CLI in the future.

Copilot for Azure is built to reason over, analyze, and interpret Azure Resource Manager (ARM), Azure Resource Graph (ARG), cost and usage data, documentation, support, best practice guidance, and more. Copilot accesses the same data and interfaces as Azure's management tools, conforming to the policy, governance, and role-based access control configured in your environment. All of this is carried out within the framework of Azure’s steadfast commitment to safeguarding customer data security and privacy.

Azure teams are continuously enhancing Copilot’s understanding of each service and capability, and every day, that understanding will help Copilot become even more helpful.

Read on to explore some of the additional scenarios being used in Microsoft Copilot for Azure today.

Learning Azure and providing recommendations

Modern clouds offer a breadth of services and capabilities, and Copilot helps you learn about every service. It can also provide tailored recommendations for the services your workloads need. Insights are delivered directly to you in the management console, accompanied by links for further reading. Copilot is up to date with the latest Azure documentation, ensuring you’re getting the most current and relevant answers to your questions.

Copilot navigates to the precise location in the portal needed to perform tasks. This feature speeds up the process from question to action.
Copilot can also answer questions in the context of the resources you’re managing, enabling you to ask about sizing, resiliency, or the tradeoffs between possible solutions.

Understanding cloud environments

The number and types of resources deployed in cloud environments are increasing. Copilot helps answer questions about a cloud environment faster and more easily.

In addition to answering questions about your environment, Copilot also facilitates the construction of Kusto Query Language (KQL) queries for use within Azure Resource Graph. Whatever your experience level, Copilot accelerates the generation of insights about your Azure resources and their deployment environments. While baseline familiarity with the Kusto Query Language can be beneficial, Copilot is designed to assist users ranging from novices to experts in achieving their Azure Resource Graph objectives, anywhere in the Azure portal. You can easily open generated queries in Azure Resource Graph Explorer to review them and ensure they accurately reflect the intended questions.

Optimizing cost and performance

It’s critical for teams to get insights into spending, recommendations on how to optimize, and predictive scenarios or “what-if” analyses. Copilot aids in understanding invoices, spending patterns, changes, and outliers, and it recommends cost optimizations.

Copilot can help you better analyze, estimate, and optimize your cloud costs. For example, if you prompt Copilot with questions like, "Why did my cost spike on July 8?" or "Show me the subscriptions that cost the most," you’ll get an immediate response based on your usage, billing, and cost data.

Copilot is integrated with the advanced AI algorithms in Application Insights Code Optimizations to detect CPU and memory usage performance issues at the code level and provides recommendations on how to fix them. In addition, Copilot helps you discover and triage available code recommendations for your .NET applications.
Metrics-based insights

Each resource in Azure offers a rich set of metrics available with Azure Monitor. Copilot can help you discover the available metrics for a resource, visualize and summarize the results, enable deeper exploration, and even perform anomaly detection to analyze unexpected changes and provide recommendations to address the issue. Copilot can also access data in Azure Monitor managed service for Prometheus, enabling the creation of PromQL queries.

CLI scripting

Azure CLI can manage all Azure resources from the command line and in scripts, with more than 9,000 commands and associated parameters. Copilot helps you easily and quickly identify the command and its parameters to carry out your specified operation. If a task requires multiple commands, Copilot generates a script aligned with Azure and scripting best practices. These scripts can be directly executed in the portal using Cloud Shell or copied for use in repeatable operations or automation.

Support and troubleshooting

When issues arise, quickly accessing the necessary information and assistance for resolution is critical. Copilot provides troubleshooting insight generated from Azure documentation and built-in, service-specific troubleshooting tools. Copilot will quickly provide step-by-step guidance for troubleshooting, while providing links to the right documentation. If more help is needed, Copilot will direct you to assisted support on request.

Copilot is also aware of service-specific diagnostics and troubleshooting tools and helps you choose the right one, whether the issue is related to high CPU usage, networking problems, getting a memory dump, scaling resources to support increased demand, or more.

Hybrid management

IT estates are complex, with many workloads running across datacenters, operational edge environments such as factories, and multicloud environments. Azure Arc creates a bridge between the Azure controls and tools and those workloads.
Copilot can also be used to design, operate, optimize, and troubleshoot Azure Arc-enabled workloads. Azure Arc facilitates the flow of valuable telemetry and observability data back to Azure. This lets you swiftly address outages, reinstate services, and resolve root causes to prevent recurrences.

Building responsibly

Copilot for Azure is designed for the needs of the enterprise. Our efforts are guided by our AI principles and Responsible AI Standard and build on decades of research on grounding and privacy-preserving machine learning. Microsoft’s work on AI is reviewed for potential harms and mitigations by a multidisciplinary team of researchers, engineers, and policy experts. All of the features in Copilot are carried out within the Azure framework of safeguarding our customers’ data security and privacy.

Copilot automatically inherits your organization’s security, compliance, and privacy policies for Azure. Data is managed in line with our current commitments. Copilot large language models are not trained on your tenant data.

Copilot can only access data from Azure and perform actions against Azure when the current user has permission via role-based access control. All requests to Azure Resource Manager and other APIs are made on behalf of the user. Copilot does not have its own identity from a security perspective. When a user asks, ‘How many VMs do I have?’ the answer will be the same as if they went to Resource Graph Explorer and wrote and executed that query on their own.

What’s next

Microsoft Copilot for Azure is already being used internally by Microsoft employees and with a small group of customers. Today, we’re excited about the next step as we announce and launch the preview to you! Please click here to sign up. We’ll onboard customers into the preview on a weekly basis.

In the coming weeks, we'll continuously add new capabilities and make improvements based on your feedback.
Learn more

- Azure Ignite 2023 Infrastructure Blog
- Adaptive Cloud
- Microsoft Copilot for Azure Documentation

[1] Research: quantifying GitHub Copilot’s impact on developer productivity and happiness, Eirini Kalliamvakou, GitHub. Sept. 7, 2022.

GPT-4 Turbo with Vision on Azure OpenAI Service

GPT-4 Turbo with Vision on Azure OpenAI Service is coming soon to public preview. It can analyze images and provide textual responses to questions about them. It incorporates both natural language processing and visual understanding. This integration allows Azure users to benefit from Azure's reliable cloud infrastructure and OpenAI's advanced AI research.

AKS Welcomes you to Ignite 2023

Hi everyone, and welcome to Microsoft Ignite 2023! The AKS team is looking forward to connecting in person and virtually with the whole AKS community throughout the Ignite keynotes, breakouts, Q&A sessions, expert meetups, or over a beverage in the hallways!

The team has been hard at work making Azure the best platform to run Kubernetes and a truly Kubernetes-powered cloud. Over the last year, Microsoft continued to leverage AKS as a tried and tested platform for its critical workloads, putting a healthy amount of pressure on the service and continuing to help us push the boundaries of what’s possible with cloud native platforms and intelligent apps.

As Kubernetes continues to become pervasive, many teams find themselves at different stages of adoption, skill, or learning. At Build 2023 we showed a prototype of an assistant for AKS that would make the perfect companion for any everyday task with AKS and Kubernetes. As part of our private preview, many of our users told us how great it would be if they could have that for all of Azure, and today we're happy to announce that all of those capabilities and more have been rolled into the new Microsoft Copilot for Azure. This AI companion will help you design, operate, optimize, and troubleshoot any service, and it brings every integration we showed for AKS and much more, including new handlers for log collection or permissions validation. Microsoft Copilot for Azure will be the perfect assistant for all teams at any stage of their AKS and cloud adoption journey! Sign up for the preview here!

Improving resilience and uptime with simplified global footprint

Nowadays, all industries, companies, and solutions rely in one way or another on software and digital components, and more than ever users expect flawless services that never fail and that perform at a high level. This has significantly increased the resiliency requirements of most of our existing and new users.
One of the most common ways to increase availability and resilience is ensuring a global footprint across multiple regions and geographies, with the added value that you can serve your users closer to their locations while benefiting from protection in case anything goes wrong with one of the regions. However, this can increase the complexity of managing and operating multiple clusters across these regions, so we’re thrilled to announce that Azure Kubernetes Fleet Manager is now Generally Available. It allows you to create fleets of AKS clusters with a few clicks and easily distribute workloads across them while orchestrating operations like upgrades in a consistent manner.

Fleet Manager is also very modular, allowing you to use exactly the functionality you want without requiring you to change your practices for scenarios you have already solved. You can choose to use it with a hub (a fully managed hub cluster that controls things like namespace and workload placement without requiring any management) or hubless if you just want a central view of clusters and central management of operations like upgrades.

As we wrapped KubeCon North America last week, two trends became very apparent as we talked with users and the community. The first is how Kubernetes is uniquely positioned to power the AI revolution, providing a scalable, reliable, and extensible platform that can meet the ever-changing needs of our users. The second is the continued need for better cost visualization and optimization and more streamlined operations, in order to reduce costs from all angles and allow users and businesses to focus on creating value. These trends have long resonated with the team, and we’re happy to show some of the latest things we’ve been working on in these areas.
Kubernetes powering the AI revolution

Today you can already use Kubernetes in conjunction with some of Azure’s great AI services to very quickly and efficiently create intelligent applications that can scale and sustain any demand. However, many scenarios, such as those with privacy or customization requirements, might need you to run and host your own model and customized inferencing. This brings a lot of challenges, as you need to figure out how to containerize the models, host them, find capacity and the right GPUs for them, schedule them, provide endpoints so your apps can plug into them, and so on.

To simplify this, we’re happy to announce the AI Toolchain Operator add-on for AKS, based on the open-source KAITO project. This add-on drastically simplifies the experience of running an OSS model, reducing it from dozens of steps and many days or weeks of work to just a couple of steps and a few minutes. It will also assist you in setting up an endpoint for your applications to consume, so you can quickly integrate with new or existing apps. We’re looking forward to partnering with our existing preview customers, and now with all users, to continue to simplify and enrich this experience and provide further integrations with the Azure ecosystem.

One of the key tenets of responsible AI is ensuring privacy concerns are addressed and respected. A few months ago, we demonstrated a prototype for Confidential Containers that allows you to run any workload leveraging confidential hardware capabilities without any code changes, and we’re happy to announce that Kata Confidential Containers are now in public preview.

Another important aspect is verifying the provenance of your images so that your supply chain doesn’t suffer from any tampering. Last month, AKS announced Image Integrity, which allows you to sign any container image in ACR and validate its signature via policy on an AKS cluster, leveraging the Ratify open-source project.
Visualize, reduce costs & streamline operations

In the current wider context, it’s of the utmost importance to ensure that teams’ infrastructure and operations are as efficient as possible and allow them to focus on their business outcomes. We’ve been focusing on three main areas:

- Cost Visualization: We’re announcing the cost analysis add-on, which allows direct integration of namespace and Kubernetes assets billing with the Azure Cost Management portal. It builds on top of the open-source OpenCost project.
- Efficient scaling and cost reduction: For pod-level scaling, we’re very happy to announce the General Availability of the KEDA (Kubernetes Event Driven Autoscaler) add-on. For the infrastructure, one of the key pain points is knowing the best, cheapest, and most readily available SKUs, as well as ensuring the most efficient usage of nodes by tightly bin-packing pods and containers within them. So we’re very happy to announce the Node Auto Provision add-on, which leverages the open-source Karpenter project to select the cheapest, highly available, and most suitable VM SKUs that allow for the most efficient bin-packing of your environments.
- Lightning-fast and efficient container starts: We’re announcing Artifact Streaming for Linux, which makes image pulls and container starts at least 15% faster (and in many cases well over 50%) by prioritizing the pull of the essential layers and using the containerd overlaybd project.
- System reserved optimization: The team has worked hard on optimizing the resource usage of the Kubernetes system components, so that every node after Kubernetes 1.28 (now GA) will have 20% more allocatable space for workloads.
- Simplifying common operations: We’ve provided over 10 new enhancements for Virtual nodes that make many more bursting and serverless scenarios possible, including load balancer service integration, container probes, debug containers, and exec/port-forward capabilities, bringing this option much closer to parity with native node capabilities. Additionally, for one of the most common tasks for applications, setting up routing (ingress, DNS, certificates), we’re making the Application Routing add-on generally available, so you can have a fully managed and scalable bundle of all those capabilities delivered out of the box by AKS.

These are some of the latest things the teams have been working on, with many more available in the AKS Release Notes and throughout our announcements. We can’t wait to meet you, chat about these and many of our other announcements, and discuss what’s coming next and how we can help you achieve even more. Make sure to follow our roadmap for what’s coming and the AKS community for deep dive content on AKS.

Announcing: Microsoft moves $25 Billion in credit card transactions to Azure confidential computing

Microsoft is proud to showcase that customers in the financial sector can rely on public Azure to add confidentiality and provide secure and compliant payment solutions that meet or exceed industry standards. Microsoft is committed to hosting 100% of our payment services on Azure, just as we would expect our customers to do. Microsoft’s Commerce Financial Services (CFS) has completed a critical milestone by deploying a level 1 Payment Card Industry Data Security Standard (PCI-DSS) compliant credit card processing and vaulting solution, moving $25 Billion in annual credit card transactions to the public Azure cloud.

Azure AI Speech launches Personal Voice in preview

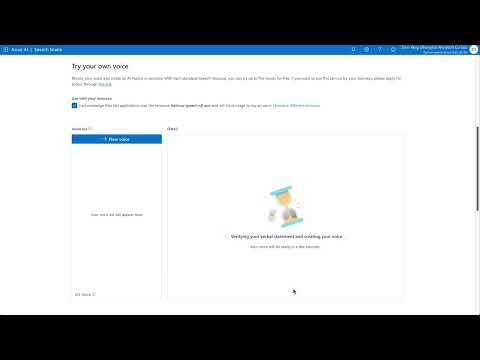

Today at the Ignite 2023 conference, Microsoft is taking customization one step further with its new 'Personal Voice' feature. This innovation is specifically designed to enable customers to build apps that allow their users to easily create their own AI voice, resulting in a fully personalized voice experience.
