Microsoft Ignite 2023

Simplify IT management with Microsoft Copilot for Azure – save time and get answers fast

Today, we’re announcing Microsoft Copilot for Azure, an AI companion that helps you design, operate, optimize, and troubleshoot your cloud infrastructure and services. It combines cutting-edge large language models (LLMs) with the Azure Resource Model, so Copilot for Azure can understand and manage everything that’s happening in Azure, from the cloud to the edge.

The cloud management landscape is evolving rapidly. There are more end users, more applications, and more requirements demanding more capabilities from the infrastructure. The number of distinct resources to manage is growing quickly, and each of those resources is becoming more sophisticated. As a result, IT professionals spend more time looking for information and are less productive.

That’s where Copilot for Azure can help. Microsoft Copilot for Azure helps you complete complex tasks faster, quickly discover and use new capabilities, and instantly generate deep insights to scale improvements broadly across the team and organization. GitHub Copilot, an AI companion for development, is helping developers do more in less time, and Copilot for Azure aims to do the same for IT professionals. Recent GitHub data shows that among developers who have used GitHub Copilot, 88 percent say they’re more productive, 77 percent say the tool helps them spend less time searching for information, and 74 percent say they can focus their efforts on more satisfying work.[1]

Copilot for Azure helps you:

- Design: create and configure the services needed while aligning with organizational policies
- Operate: answer questions, author complex commands, and manage resources
- Troubleshoot: orchestrate across Azure services for insights to summarize issues, identify causes, and suggest solutions
- Optimize: improve costs, scalability, and reliability through recommendations for your environment

Copilot is available in the Azure portal and will be available from the Azure mobile app and CLI in the future.

Copilot for Azure is built to reason over, analyze, and interpret Azure Resource Manager (ARM), Azure Resource Graph (ARG), cost and usage data, documentation, support, best practice guidance, and more. Copilot accesses the same data and interfaces as Azure’s management tools. It conforms to the policy, governance, and role-based access control configured in your environment, all within Azure’s commitment to safeguarding customer data security and privacy.

Azure teams are continuously improving Copilot’s understanding of each service and capability, and that understanding will make Copilot more helpful over time. Read on to explore some of the additional scenarios being used in Microsoft Copilot for Azure today.

Learning Azure and providing recommendations

Modern clouds offer a breadth of services and capabilities, and Copilot helps you learn about every service. It can also provide tailored recommendations for the services your workloads need. Insights are delivered directly to you in the management console, accompanied by links for further reading. Copilot is up to date with the latest Azure documentation, so you get the most current and relevant answers to your questions. Copilot navigates to the precise location in the portal needed to perform tasks. This feature speeds up the process from question to action.
Copilot can also answer questions in the context of the resources you’re managing, so you can ask about sizing, resiliency, or the tradeoffs between possible solutions.

Understanding cloud environments

The number and types of resources deployed in cloud environments are increasing. Copilot helps you answer questions about a cloud environment faster and more easily. In addition to answering questions about your environment, Copilot also helps construct Kusto Query Language (KQL) queries for use within Azure Resource Graph. Whatever your experience level, Copilot speeds up the generation of insights about your Azure resources and their deployment environments. Baseline familiarity with KQL can be beneficial, but Copilot is designed to help users from novices to experts achieve their Azure Resource Graph objectives anywhere in the Azure portal. You can open generated queries in Azure Resource Graph Explorer and review them to make sure they accurately reflect the intended questions.

Optimizing cost and performance

It’s critical for teams to get insights into spending, recommendations on how to optimize, and predictive scenarios or “what-if” analyses. Copilot helps you understand invoices, spending patterns, changes, and outliers, and it recommends cost optimizations, so you can better analyze, estimate, and optimize your cloud costs. For example, if you prompt Copilot with questions like “Why did my cost spike on July 8?” or “Show me the subscriptions that cost the most,” you’ll get an immediate response based on your usage, billing, and cost data.

Copilot is integrated with the advanced AI algorithms in Application Insights Code Optimizations. It detects CPU and memory usage performance issues at the code level and provides recommendations on how to fix them. In addition, Copilot helps you discover and triage available code recommendations for your .NET applications.
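The method Copilot uses to explain a cost spike is not described here. The sketch below is a hypothetical illustration of the kind of signal behind a question like “Why did my cost spike on July 8?”. It flags days whose cost sits far above the trailing average, using a simple rolling z-score. The function name, the seven-day window, and the three-standard-deviation threshold are assumptions chosen for illustration. The input is a plain dictionary of ISO dates to costs, not data from any Azure Cost Management API.

```python
from statistics import mean, stdev


def find_cost_spikes(daily_costs, window=7, threshold=3.0):
    """Return the dates whose cost is more than `threshold` standard deviations
    above the mean of the preceding `window` days (a rolling z-score).

    `daily_costs` maps ISO-8601 date strings ("2023-07-08") to a daily cost.
    ISO strings sort chronologically, so sorted() puts the days in order.
    Hypothetical helper for illustration; not part of any Azure SDK.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    days = sorted(daily_costs)
    spikes = []
    for i in range(window, len(days)):
        history = [daily_costs[d] for d in days[i - window:i]]
        mu, sigma = mean(history), stdev(history)
        today = daily_costs[days[i]]
        if sigma == 0:
            # Perfectly flat history: any increase counts as a spike.
            if today > mu:
                spikes.append(days[i])
        elif (today - mu) / sigma > threshold:
            spikes.append(days[i])
    return spikes


# Hypothetical July costs with an unusual jump on July 8.
costs = {
    "2023-07-01": 100.0, "2023-07-02": 102.0, "2023-07-03": 98.0,
    "2023-07-04": 101.0, "2023-07-05": 99.0, "2023-07-06": 100.0,
    "2023-07-07": 103.0, "2023-07-08": 180.0, "2023-07-09": 101.0,
}
print(find_cost_spikes(costs))  # ['2023-07-08']
```

A flagged date is only a starting point. Explaining why the cost jumped still requires breaking the total down by subscription, service, or resource, which is the part answered from your usage, billing, and cost data.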
Metrics-based insights

Each resource in Azure offers a rich set of metrics available with Azure Monitor. Copilot can help you discover the available metrics for a resource, visualize and summarize the results, and explore further. It can also perform anomaly detection to analyze unexpected changes and recommend how to address the issue. Copilot can also access data in Azure Monitor managed service for Prometheus, so it can create PromQL queries.

CLI scripting

Azure CLI can manage all Azure resources from the command line and in scripts, with more than 9,000 commands and associated parameters. Copilot helps you quickly identify the command and parameters needed to carry out a specified operation. If a task requires multiple commands, Copilot generates a script aligned with Azure and scripting best practices. You can run these scripts directly in the portal using Cloud Shell or copy them for use in repeatable operations or automation.

Support and troubleshooting

When issues arise, quick access to the information and assistance needed for resolution is critical. Copilot provides troubleshooting insights generated from Azure documentation and built-in, service-specific troubleshooting tools. Copilot quickly provides step-by-step troubleshooting guidance along with links to the right documentation. If you need more help, Copilot will direct you to assisted support on request. Copilot is also aware of service-specific diagnostics and troubleshooting tools and helps you choose the right one. This applies whether the issue involves high CPU usage, networking problems, capturing a memory dump, scaling resources to support increased demand, or more.

Hybrid management

IT estates are complex, with many workloads running across datacenters, operational edge environments such as factories, and multiple clouds. Azure Arc creates a bridge between Azure controls and tools and those workloads.
Copilot can also be used to design, operate, optimize, and troubleshoot Azure Arc-enabled workloads. Azure Arc lets valuable telemetry and observability data flow back to Azure. You can then swiftly address outages, restore services, and resolve root causes to prevent recurrences.

Building responsibly

Copilot for Azure is designed for the needs of the enterprise. Our efforts are guided by our AI principles and Responsible AI Standard and build on decades of research on grounding and privacy-preserving machine learning. A multidisciplinary team of researchers, engineers, and policy experts reviews Microsoft’s work on AI for potential harms and mitigations. All of the features in Copilot operate within Azure’s framework for safeguarding customer data security and privacy. Copilot automatically inherits your organization’s security, compliance, and privacy policies for Azure. Data is managed in line with our current commitments, and Copilot’s large language models are not trained on your tenant data.

Copilot can only access data from Azure and perform actions against Azure when the current user has permission through role-based access control. All requests to Azure Resource Manager and other APIs are made on behalf of the user, and Copilot does not have its own identity from a security perspective. When a user asks, “How many VMs do I have?”, the answer will be the same as if they had gone to Resource Graph Explorer and written and executed that query themselves.

What’s next

Microsoft Copilot for Azure is already being used internally by Microsoft employees and by a small group of customers. Today, we’re excited to take the next step by announcing and launching the preview to you! Sign up to join the preview. We’ll onboard customers on a weekly basis. In the coming weeks, we’ll continuously add new capabilities and make improvements based on your feedback.
Learn more:

- Azure Ignite 2023 Infrastructure Blog
- Adaptive Cloud
- Microsoft Copilot for Azure Documentation

[1] Research: quantifying GitHub Copilot’s impact on developer productivity and happiness, Eirini Kalliamvakou, GitHub. Sept. 7, 2022.

Welcoming Mistral, Phi, Jais, Code Llama, NVIDIA Nemotron, and more to the Azure AI Model Catalog

We are excited to announce the addition of several new foundation and generative AI models to the Azure AI model catalog. From Hugging Face, we have onboarded a diverse set of Stable Diffusion models, Falcon models, CLIP, Whisper V3, BLIP, and SAM models. In addition to Hugging Face models, we are adding Code Llama and Nemotron models from Meta and NVIDIA, respectively. We are also introducing our cutting-edge Phi models from Microsoft Research. These additions bring 40 new models and 4 new modalities, including text-to-image and image embedding, to the model catalog.

Today, we are also pleased to announce Models as a Service (MaaS). Pro developers will soon be able to easily integrate the latest AI models into their applications as API endpoints. These include Llama 2 from Meta, Command from Cohere, Jais from G42, and premium models from Mistral. Developers can also fine-tune these models with their own data without needing to set up and manage GPU infrastructure, helping eliminate the complexity of provisioning resources and managing hosting.

Below is more information about the new models we are bringing to Models as a Service and to the Azure AI model catalog.

New Models in Models as a Service (MaaS)

Command (Coming Soon)

Command is Cohere’s premier text generation model. It is designed to respond effectively to user commands and serve practical business applications out of the box. It offers a range of default functionalities but can be customized for company-specific language or advanced use cases. Command’s capabilities include writing product descriptions, drafting emails, suggesting press release examples, categorizing documents, extracting information, and answering general queries. We will soon support Command in MaaS.

Jais (Coming Soon)

Jais is a 13-billion-parameter model developed by G42 and trained on a diverse 395-billion-token dataset comprising 116 billion Arabic and 279 billion English tokens.
Notably, Jais was trained on Condor Galaxy 1, a multi-exaFLOP AI supercomputer co-developed by G42 and Cerebras Systems. This model is a significant advancement for AI in the Arabic-speaking world, offering over 400 million Arabic speakers the opportunity to explore the potential of generative AI. Jais will also be offered in MaaS as inference APIs and hosted fine-tuning.

Mistral

Mistral 7B is a large language model with 7.3 billion parameters that can generate coherent text and perform a variety of natural language processing tasks. It is a significant leap from previous models and outperforms many existing models on a variety of benchmarks. Mistral 7B uses grouped-query attention, which allows faster inference, and sliding window attention, which lets it handle longer sequences at lower cost. The Azure AI model catalog will soon offer Mistral’s premium models in Models as a Service (MaaS) through inference APIs and hosted fine-tuning. Variants:

- Mistral-7B-V01
- Mistral-7B-Instruct-V01

New Models in Azure AI Model Catalog

Phi

Phi-1.5 is a Transformer with 1.3 billion parameters. It was trained on the same data sources as Phi-1, plus a new data source of various synthetic NLP texts. On benchmarks testing common sense, language understanding, and logical reasoning, Phi-1.5 demonstrates nearly state-of-the-art performance among models with fewer than 10 billion parameters. Phi-1.5 can write poems, draft emails, create stories, summarize texts, and write Python code (such as code that downloads a Hugging Face transformer model).

Phi-2 is a Transformer with 2.7 billion parameters. It shows dramatic improvement in reasoning capabilities and safety measures compared to Phi-1.5, while remaining relatively small compared to other transformers in the industry.
With the right fine-tuning and customization, these small language models (SLMs) are powerful tools for applications both in the cloud and at the edge.

- Phi 1.5
- Phi 2

Whisper V3

Whisper is a Transformer-based encoder-decoder model, also called a sequence-to-sequence model. Whisper large-v3 was trained on 1 million hours of weakly labeled audio and 4 million hours of pseudo-labeled audio collected using Whisper large-v2. The models were trained on either English-only data or multilingual data. The English-only models were trained on speech recognition, and the multilingual models were trained on both speech recognition and speech translation. For speech recognition, the model predicts transcriptions in the same language as the audio. For speech translation, the model predicts transcriptions in a different language from the audio.

- OpenAI-Whisper-Large-V3

BLIP

BLIP (Bootstrapping Language-Image Pre-training) is a model that can perform various multimodal tasks, including visual question answering (VQA), image-text retrieval (image-text matching), and image captioning. Created by Salesforce, BLIP is based on vision-language pre-training (VLP), which combines pre-trained vision models and large language models (LLMs) for vision-language tasks. BLIP makes effective use of noisy web data by bootstrapping the captions: a captioner generates synthetic captions and a filter removes the noisy ones. It achieves state-of-the-art results on a wide range of vision-language tasks, such as image-text retrieval, image captioning, and VQA. The following variants are available in the model catalog:

- Salesforce-BLIP-VQA-Base
- Salesforce-BLIP-Image-Captioning-Base
- Salesforce-BLIP-2-OPT-2-7b-VQA
- Salesforce-BLIP-2-OPT-2-7b-Image-To-Text

CLIP

CLIP (Contrastive Language-Image Pre-Training) is a neural network created by OpenAI. It is trained on a variety of image-text pairs to efficiently learn visual concepts from natural language supervision.
You can apply CLIP to any visual classification benchmark simply by providing the names of the visual categories to be recognized, similar to the “zero-shot” capabilities of GPT-2 and GPT-3. CLIP can also extract visual and text embeddings for downstream tasks such as information retrieval. This model adds to the growing list of OpenAI models available in the model catalog, including GPT and DALL-E. Like the other curated models in the catalog, these models are thoroughly tested by Azure Machine Learning. The available CLIP variants include:

- OpenAI-CLIP-Image-Text-Embeddings-ViT-Base-Patch32
- OpenAI-CLIP-ViT-Base-Patch32
- OpenAI-CLIP-ViT-Large-Patch14

Code Llama

As a result of the partnership between Microsoft and Meta, we are delighted to offer the new Code Llama model and its variants in the Azure AI model catalog. Code Llama is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 34 billion parameters. Code Llama is state of the art among publicly available LLMs on code tasks. It can make workflows faster and more efficient for current developers and lower the barrier to entry for people who are learning to code. It can also serve as a productivity and educational tool that helps programmers write more robust, well-documented software. The available Code Llama variants include:

- CodeLlama-34b-Python
- CodeLlama-34b-Instruct
- CodeLlama-13b
- CodeLlama-13b-Python
- CodeLlama-13b-Instruct
- CodeLlama-7b
- CodeLlama-7b-Python
- CodeLlama-7b-Instruct

Falcon models

The Technology Innovation Institute (TII) created the next set of models. Falcon-7B and Falcon-40B are causal decoder-only large language models with 7 billion and 40 billion parameters. They were trained on 1,500 billion and 1 trillion tokens, respectively, of the RefinedWeb dataset enhanced with curated corpora. The models are available under the Apache 2.0 license.
They outperform comparable open-source models and feature an architecture optimized for inference. The Falcon variants include:

- Falcon-40b
- Falcon-40b-Instruct
- Falcon-7b-Instruct
- Falcon-7b

NVIDIA Nemotron

Another update is the launch of the new NVIDIA AI collection of models and registry. As part of this partnership, NVIDIA is launching a new 8B LLM, Nemotron-3, in three variants: pretrained, chat, and Q&A. Nemotron-3 is a family of enterprise-ready, GPT-based, decoder-only generative text models compatible with the NVIDIA NeMo Framework.

- Nemotron-3-8B-Base-4k
- Nemotron-3-8B-Chat-4k-SFT
- Nemotron-3-8B-Chat-4k-RLHF
- Nemotron-3-8B-Chat-4k-SteerLM
- Nemotron-3-8B-QA-4k

SAM

The Segment Anything Model (SAM) is an image segmentation model that creates high-quality object masks from simple input prompts. SAM was trained on a massive dataset of 11 million images and 1.1 billion masks. It shows strong zero-shot capabilities, adapting effectively to new image segmentation tasks without task-specific training. Created by Meta, SAM matches or exceeds the performance of prior fully supervised models.

- Facebook-Sam-Vit-Large
- Facebook-Sam-Vit-Huge
- Facebook-Sam-Vit-Base

Stable Diffusion Models

The latest additions to the model catalog include Stable Diffusion models for text-to-image and inpainting tasks, developed by Stability AI and CompVis. These models offer greater robustness and consistency in generating images from text descriptions. Adding them to our catalog increases the diversity of available modalities and models. Users gain access to state-of-the-art capabilities for creative content generation, design, and problem-solving.
Adding Stable Diffusion models to the Azure AI model catalog reflects our commitment to offering advanced and stable AI models to data scientists and developers for their machine learning projects, apps, and workflows. The available Stable Diffusion models include:

- Stable-Diffusion-V1-4
- Stable-Diffusion-2-1
- Stable-Diffusion-V1-5
- Stable-Diffusion-Inpainting
- Stable-Diffusion-2-Inpainting

Curated Model Catalog Inference Optimizations

In addition to the curated models above, we also improved the overall user experience by optimizing the catalog and its features. Models in the Azure AI model catalog are served by a custom inference container built for high-performance inference and serving of foundation models. The container includes multiple backend inference engines, including vLLM, DeepSpeed-FastGen, and Hugging Face, to cover a wide variety of model architectures.

Our default choice for serving models is vLLM. It provides high throughput and efficient memory management with continuous batching and PagedAttention. We are also excited to support DeepSpeed-FastGen, the latest offering from the DeepSpeed team, which introduces a Dynamic SplitFuse technique for even higher throughput. You can try out the alpha version of DeepSpeed-FastGen with our Llama-2 family of models. Learn more about DeepSpeed-FastGen here: https://github.com/microsoft/DeepSpeed/tree/master/blogs/deepspeed-fastgen. For models that can’t be served by vLLM or DeepSpeed-MII (the library through which DeepSpeed-FastGen is provided), the container also includes the Hugging Face engine.

To further maximize GPU utilization and achieve even higher throughput, the container deploys multiple model replicas based on the available hardware and routes incoming requests to available replicas. This lets it efficiently serve more concurrent user requests.
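The routing policy the container uses is not specified. The minimal sketch below assumes a least-outstanding-requests policy and one replica per fixed-size group of GPUs. It shows one way to size replicas from the available hardware and then route each request to an available replica. `replica_count`, `LeastLoadedRouter`, and the GPUs-per-replica figure are hypothetical names and parameters chosen for illustration.

```python
from dataclasses import dataclass


def replica_count(num_gpus: int, gpus_per_replica: int) -> int:
    """Number of full model replicas that fit on a node (hypothetical sizing rule)."""
    if gpus_per_replica <= 0:
        raise ValueError("gpus_per_replica must be positive")
    return num_gpus // gpus_per_replica


@dataclass
class Replica:
    name: str
    in_flight: int = 0  # requests currently being served by this replica


class LeastLoadedRouter:
    """Send each request to the replica with the fewest in-flight requests."""

    def __init__(self, num_replicas: int):
        self.replicas = [Replica(f"replica-{i}") for i in range(num_replicas)]

    def acquire(self) -> str:
        # min() keeps the first of several equally loaded replicas,
        # so ties go to the lowest-numbered replica.
        replica = min(self.replicas, key=lambda r: r.in_flight)
        replica.in_flight += 1
        return replica.name

    def release(self, name: str) -> None:
        # Call when a request finishes so the replica can take new work.
        for replica in self.replicas:
            if replica.name == name:
                replica.in_flight -= 1
                return
        raise KeyError(name)


# Example: an 8-GPU node and a model that needs 2 GPUs per replica.
router = LeastLoadedRouter(replica_count(num_gpus=8, gpus_per_replica=2))
first = [router.acquire() for _ in range(5)]
print(first)  # ['replica-0', 'replica-1', 'replica-2', 'replica-3', 'replica-0']
router.release("replica-2")
print(router.acquire())  # replica-2
```

Least-outstanding-requests is a common choice for LLM serving because generation requests vary widely in length. A plain round-robin rotation is simpler, but it can stack several long requests on the same replica.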
Additionally, we integrated Azure AI Content Safety to streamline the detection of potentially harmful content in AI-generated applications and services. This integration aims to enforce responsible AI practices and safe usage of our advanced AI models. You can start seeing the benefits of our container with the Llama-2 family of models. We plan to extend support to more models, including Falcon and other modalities such as Stable Diffusion. To get started with model inferencing, see the “Learn more” section below or this example notebook: https://github.com/Azure/azureml-examples/blob/main/sdk/python/foundation-models/system/inference/text-generation/llama-safe-online-deployment.ipynb

Fine-tuning Optimizations

Fine-tuning large LLMs, such as those with 70B parameters and above, requires a lot of GPU memory. These models can run out of memory during fine-tuning, and sometimes cannot even be loaded if GPU memory is small. The problem is worse in most real-world use cases, which need context lengths as close as possible to the model’s maximum allowed context length and push memory requirements even further. To solve this problem, we are excited to provide users with some of the latest optimizations for fine-tuning: Low-Rank Adaptation (LoRA), DeepSpeed ZeRO, and gradient checkpointing.

Gradient checkpointing lowers GPU memory requirements by storing only selected activations computed during the forward pass and recomputing the rest during the backward pass. This is known to reduce activation memory from O(n) to O(sqrt(n)), where n is the number of layers, at a modest additional computational cost from the recomputation.

LoRA freezes the pretrained model’s weights during fine-tuning and trains only a small set of added low-rank weight matrices (LoRA adapters). This reduces both the GPU memory required and the fine-tuning time. LoRA reduces the number of trainable parameters by orders of magnitude without much impact on the quality of the fine-tuned model.
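The arithmetic below makes the LoRA and gradient-checkpointing claims concrete. It counts trainable parameters for a single weight matrix and estimates how many layers’ worth of activations are kept at peak when checkpointing. The 4096 x 4096 matrix, rank 8, and 64-layer depth are illustrative assumptions, not the settings used by the Azure AI fine-tuning service. The checkpointing model is simplified and assumes every layer produces activations of equal size.

```python
import math


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Trainable parameters for one weight matrix: (full fine-tuning, LoRA).

    LoRA keeps the pretrained W (d_out x d_in) frozen and learns
    B (d_out x rank) and A (rank x d_in); the applied update is B @ A.
    """
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


def checkpointed_activations(n_layers: int, segment: int) -> int:
    """Layers' worth of activations held at peak when a checkpoint is stored
    every `segment` layers: the stored checkpoints plus one segment that is
    recomputed during the backward pass (simplified model)."""
    return math.ceil(n_layers / segment) + segment


full, lora = lora_trainable_params(4096, 4096, rank=8)
print(full, lora, full // lora)  # 16777216 65536 256

n = 64
best = min(range(1, n + 1), key=lambda k: checkpointed_activations(n, k))
print(best, checkpointed_activations(n, best))  # 8 16
```

The best segment length lands at sqrt(64) = 8. Peak activation storage drops from 64 layers’ worth to 16, which is the O(sqrt(n)) behavior described above (here about 2 sqrt(n)). For this one matrix, the LoRA count shows a 256x reduction in trainable parameters. Across a whole model, the ratio depends on which matrices receive adapters.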
DeepSpeed’s Zero Redundancy Optimizer (ZeRO) achieves the benefits of data and model parallelism while alleviating the limitations of both. DeepSpeed ZeRO has three stages that progressively partition the model states (optimizer states, then gradients, then parameters) across GPUs. It uses a dynamic communication schedule to share the necessary model states across GPUs. The resulting GPU memory reduction lets users fine-tune LLMs like Llama-2-70B on a single node of 8x V100 GPUs, for sequence lengths typical of many use cases.

All these optimizations are orthogonal and can be used together in any combination. This lets our customers train large models on multi-GPU clusters with mixed precision to get the best fine-tuning accuracy.

AI safety and Responsible AI

Responsible AI is at the heart of Microsoft’s approach to AI and how we partner. For years we’ve invested heavily in making Azure the place for responsible, cutting-edge AI innovation. This applies whether customers are building their own models or using pre-built and customizable models from Microsoft, Meta, OpenAI, and the open-source ecosystem.

We are thrilled to announce that Stable Diffusion models now support Azure AI Content Safety. Azure AI Content Safety detects harmful user-generated and AI-generated content in applications and services. Content Safety includes text and image APIs that detect harmful material. We also have an interactive Content Safety Studio where you can view, explore, and try out sample code for detecting harmful content across different modalities. You can learn more from the link below.

We cannot wait to see the applications and solutions our users will create with these state-of-the-art models. Explore SDK and CLI examples for foundation models in the azureml-examples GitHub repo!
- SDK: azureml-examples/sdk/python/foundation-models/system (Azure/azureml-examples on GitHub)
- CLI: azureml-examples/cli/foundation-models/system (Azure/azureml-examples on GitHub)

Learn more!

- Get started with new vision models in Azure AI Studio and Azure Machine Learning
- Sign up for Azure AI and start exploring vision-based models in the Azure Machine Learning model catalog
- Announcing Foundation Models in Azure Machine Learning
- Explore documentation for the model catalog in Azure AI
- Learn more about generative AI in Azure Machine Learning
- Learn more about Azure AI Content Safety
- Get started with model inferencing

GPT-4 Turbo with Vision on Azure OpenAI Service

GPT-4 Turbo with Vision on Azure OpenAI Service is coming soon to public preview. It can analyze images and answer questions about them in text, combining natural language processing with visual understanding. This integration lets Azure users benefit from both Azure’s reliable cloud infrastructure and OpenAI’s advanced AI research.

Elevate Your LLM Applications to Production via LLMOps

Discover the future of LLM application development with Azure Machine Learning prompt flow. Dive into our latest blog, 'Elevate Your LLM Applications to Production via LLMOps,' where we unveil groundbreaking insights and tools to transform your AI development journey. Learn how to build, evaluate, and deploy LLM applications efficiently and with confidence. Get ready to explore the possibilities in AI development; your next big leap starts here! #LLMOps #AIDevelopment #LLM applications

Azure AI Speech launches Personal Voice in preview

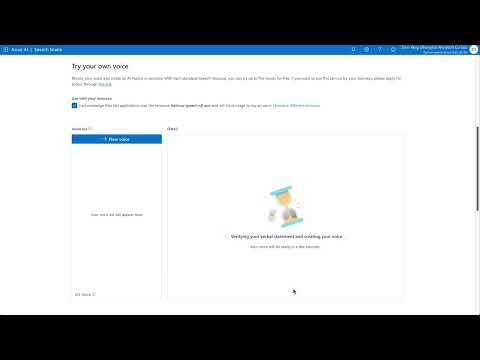

Today at the Ignite 2023 conference, Microsoft is taking customization one step further with its new Personal Voice feature. This feature lets customers build apps in which their users can easily create their own AI voice, for a fully personalized voice experience.

Announcing the Public Preview of Integrated Vectorization in Azure AI Search

Announcing the Public Preview of Integrated Vectorization in Azure AI Search. Discover the game-changing feature designed to accelerate the vectorization development process, reduce maintenance tasks, and seamlessly integrate vectors into your applications.

Announcing Azure confidential VMs with NVIDIA H100 Tensor Core GPUs in Preview

Today, we are excited to announce the gated preview of Azure confidential VMs with NVIDIA H100 Tensor Core GPUs. These VMs are ideal for training, fine-tuning, and serving popular open-source models. Examples include Stable Diffusion and its larger variants (SDXL, SSD…) and language models (Zephyr, Falcon, GPT2, MPT, Llama2, Wizard, Xwin).